This blog post will assist you in upgrading a FIM 2010 environment to MIM 2016. To be clear: FIM 2010, not FIM 2010 R2. Disclaimer: if you “play” around like I do below, make sure you use one, or more, of the following.

- A test environment
- SQL Backups
- VM Snapshots
- …

Trust me, sooner or later they’ll save your life, or at least your day. After each attempt I did an SQL restore to be absolutely sure my upgrade path was OK. The installer “touches” the databases pretty quickly, even if it fails at the beginning of the process.

The upgrade process is also explained on TechNet (Upgrading Forefront Identity Manager 2010 R2 to Microsoft Identity Manager 2016), but that guide is only partially applicable to the scenario I have in mind:

- No information on upgrading from FIM 2010, only FIM 2010 R2 is mentioned
- No information on transitioning to a more recent Operating System
- No information on transitioning to a more recent database platform

In order to clarify I’ll show a topology diagram of our current setup:

Current versions:

Target versions:

I won’t post a target diagram because, in our case, we decided not to change anything. We intend to upgrade FIM 2010 to MIM 2016, but we would also like to upgrade the supporting components, such as the underlying operating system and the SQL Server version. The TechNet guide shows what has to be done to perform an in-place upgrade of FIM 2010 R2 to MIM 2016. If I were to do an in-place upgrade, I would end up with MIM 2016 on Server 2008 R2. I’d rather not do an in-place upgrade of 2008 R2 to 2012 R2, which means I would have to migrate MIM 2016 to another box anyway.

Another disadvantage of upgrading in place is that you’ll have downtime during the upgrade. Well, eventually you’ll have some downtime either way, but if you can leave the current environment intact, you avoid the lengthy restore process if something goes wrong. And what about the database upgrade processes? Depending on your environment, those can take quite some time. If you want to plan your window for the upgrade, you could follow my approach as a “dry run” with the production data, without impacting your current (running) environment! If you’re curious how, read on!

I wanted to determine the required steps to get from current to target with the least amount of hassle. I’ll describe the steps I followed and the issues I encountered:

Upgrading/Transitioning the FIM Synchronization Service: Attempt #1

- Stop and disable all scheduled tasks that execute run profiles
- Stop and disable all FIM 2010 services (both Sync and Service)
- Backup the FIMSynchronization database on the SQL 2008 platform (see note at bottom)
- Restore the FIMSynchronization database on the SQL 2012 platform
- Enable SQL Server Service Broker for the FIMSynchronization database (see note at bottom)
- Transfer the logins used by the database from SQL 2008 to SQL 2012
- Copy the FIM Synchronization service encryption keys to the Windows 2012 R2 Server
- Run the MIM 2016 Synchronization Service MSI on the Windows 2012 R2 server

However, that last step resulted in the following events and concluded with an MSI installation failure:

In words: Error 25009.The Microsoft Identity Manager Synchronization Service setup wizard cannot configure the specified database. Invalid object name 'mms_management_agent'. <hr=0x80230406>

And in the Application Event log:

In words: Conversion of reference attributes started.

In words: Conversion of reference attributes failed.

In words: Product: Microsoft Identity Manager Synchronization Service -- Error 25009.The Microsoft Identity Manager Synchronization Service setup wizard cannot configure the specified database. Invalid object name 'mms_management_agent'. <hr=0x80230406>

The same information was also found in the MSI verbose log. Some googling led me to a few suggested fixes involving SQL access rights or the SQL compatibility level, none of which worked for me.

Upgrading/Transitioning the FIM Synchronization Service: Attempt #2

This attempt is mostly the same as the previous one. However, this time I ran the MIM 2016 installer directly on the FIM 2010 Synchronization Server. I’ll save you the trouble: it fails with the exact same error. As a bonus, the setup rolls back and leaves you with a server with NO FIM installed.

Upgrading/Transitioning the FIM Synchronization Service: Attempt #3

I’ll provide an overview of the steps again:

-

Stop and disable all scheduled tasks that execute run profiles

-

Stop and disable all FIM 2010 services (both Sync and Service)

-

Backup the FIMSynchronization database on the SQL 2008 platform (see note at bottom)

-

Restore the FIMSynchronization database on the SQL 2012 platform

-

Enable SQL Server Service Broker for the FIMSynchronization database (see note at bottom)

-

Transfer the logins used by the database from SQL 2008 to SQL 2012

-

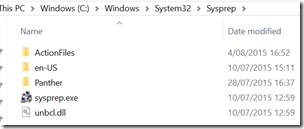

Install a new (temporary) Windows 2012 Server

-

Copy the FIM Synchronization service encryption keys to the Windows 2012 Server

-

Run the FIM 2010 R2 (4.1.2273.0) Synchronization Service MSI on the Windows 2012 server–> Success

-

Stop and disable the FIM Synchronization Service on the Windows 2012 server

-

Copy the FIM Synchronization service encryption keys to the Windows 2012 R2 Server

-

Run the MIM 2016 Synchronization Service MSI on the Windows 2012 R2 server

Again that resulted in several events and concluded with the an MSI installation failure:

In words: Product: Microsoft Identity Manager Synchronization Service -- Error 25009.The Microsoft Identity Manager Synchronization Service setup wizard cannot configure the specified database. Incorrect syntax near 'MERGE'. You may need to set the compatibility level of the current database to a higher value to enable this feature. See help for the SET COMPATIBILITY_LEVEL option of ALTER DATABASE.

Now that’s an error that doesn’t seem too scary. It clearly suggests raising the database compatibility level so that the MERGE feature becomes available.

Upgrading/Transitioning the FIM Synchronization Service: Attempt #4 –> Success!

I’ll provide an overview of the steps again:

- Stop and disable all scheduled tasks that execute run profiles
- Stop and disable all FIM 2010 services (both Sync and Service)
- Backup the FIMSynchronization database on the SQL 2008 platform (see note at bottom)
- Restore the FIMSynchronization database on the SQL 2012 platform
- Enable SQL Server Service Broker for the FIMSynchronization database (see note at bottom)
- Transfer the logins used by the database from SQL 2008 to SQL 2012
- Don’t worry about the SQL Agent Jobs, the MIM Service setup will recreate those
- Install a new (temporary) Windows 2012 Server
- Copy the FIM Synchronization service encryption keys to the Windows 2012 Server
- Run the FIM 2010 R2 (4.1.2273.0) Synchronization Service MSI on the Windows 2012 server
- Stop and disable the FIM Synchronization Service on the Windows 2012 server
- Change the SQL Compatibility Level to 2008 (100) on the database
- Copy the FIM Synchronization service encryption keys to the Windows 2012 R2 Server
- Run the MIM 2016 Synchronization Service MSI on the Windows 2012 R2 server –> Success!
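For the “transfer the logins” step, one sanity check after the restore is to look for database users whose SID has no matching login on the new SQL instance. The query below is only a sketch, assuming the restored database is called FIMSynchronization as in the steps above. It only reports and changes nothing. Run it with an account that can see all server logins (a sysadmin, for example), otherwise the result can be misleading.

```sql
-- Sketch: list database users that have no matching server login (by SID).
-- Assumes the restored database is named FIMSynchronization.
USE [FIMSynchronization];
GO

SELECT dp.name      AS database_user,
       dp.type_desc AS user_type
FROM sys.database_principals AS dp
LEFT JOIN sys.server_principals AS sp
       ON dp.sid = sp.sid
WHERE sp.sid IS NULL
  AND dp.type IN ('S', 'U', 'G')  -- SQL users, Windows users, Windows groups
  AND dp.principal_id > 4;        -- skip dbo, guest, INFORMATION_SCHEMA, sys
GO
```

Keep in mind that a Windows user who only gets access through a group login can show up in this list without being an actual problem.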

Changing the compatibility level can easily be done using SQL Management Studio:

In my case it was on SQL Server 2005 (90) and I changed it to SQL Server 2008 (100). If you prefer doing this through an SQL query:

USE [master]

GO

ALTER DATABASE [FIMSynchronization] SET COMPATIBILITY_LEVEL = 100

GO
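If you want to see the current level before (and after) changing it, sys.databases exposes it. A small sketch, assuming the default database names FIMSynchronization and FIMService used in this post:

```sql
-- Sketch: show the compatibility level of the restored FIM databases.
-- 90 = SQL Server 2005, 100 = SQL Server 2008, 110 = SQL Server 2012.
SELECT name, compatibility_level
FROM sys.databases
WHERE name IN (N'FIMSynchronization', N'FIMService');
```

A database restored from an older platform keeps the level it had there, which would explain why mine was still on 90 after the restore on SQL 2012.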

Bonus information:

This is the command I ran to install both the FIM 2010 R2 and MIM 2016 Synchronization Instance:

Msiexec /i "Synchronization Service.msi" /qb! STORESERVER=sqlcluster.contoso.com SQLINSTANCE=fimsql SQLDB=FIMSynchronization SERVICEACCOUNT=svcsync SERVICEDOMAIN=CONTOSO SERVICEPASSWORD=PASSWORD GROUPADMINS=CONTOSO\GGFIMSyncSvcAdmins GROUPOPERATORS=CONTOSO\GGFIMSyncSvcOps GROUPACCOUNTJOINERS=CONTOSO\GGFIMSyncSvcJoiners GROUPBROWSE=CONTOSO\GGFIMSyncSvcBrowse GROUPPASSWORDSET=CONTOSO\GGFIMSyncSvcPWReset FIREWALL_CONF=1 ACCEPT_EULA="1" SQMOPTINSETTING="0" /l*v C:\MIM\LOGS\FIMSynchronizationServiceInstallUpgrade.log

No real rocket science here. However, make sure not to use /q but /qb! instead, as the latter allows popups to be shown and answered by you, for instance when you are prompted to provide the encryption keys.

Upgrading/Transitioning the FIM Service: Attempt #1 –> Success!

Now to be honest, the upgrade I feared the most proved to be the easiest. From past FIM experiences I know the FIM Service comes with a DB upgrade utility. The setup runs this for you. I figured: why on earth would they throw away the information to upgrade from FIM 2010 to FIM 2010 R2 and cripple the tool so that it can only upgrade FIM 2010 R2 to MIM 2016?! And indeed, they did not! Here are the steps I took to upgrade my FIM Portal & Service:

- Stop and disable all scheduled tasks that execute run profiles => this was already the case
- Stop and disable all FIM 2010 services (both Sync and Service) => this was already the case
- Backup the FIMService database on the SQL 2008 platform (see note at bottom)
- Restore the FIMService database on the SQL 2012 platform
- Enable SQL Server Service Broker for the FIMService database (see note at bottom)
- Transfer the logins used by the database from SQL 2008 to SQL 2012
- Install a standalone SharePoint 2013 Foundation SP2
- Run the MIM 2016 Service and Portal MSI on the Windows 2012 R2 server –> Success!
- Note: the compatibility level was raised to 2008 (100) by the setup

One thing that assured me the FIM Service database was upgraded successfully was the database upgrade log. The following event indicates where you can find it:

The path: c:\Program Files\Microsoft Forefront Identity Manager\2010\Service\Microsoft.IdentityManagement.DatabaseUpgrade_tracelog.txt

An extract:

Database upgrade : Started.

Database upgrade : Starting command line parsing.

Database upgrade : Completed commandline parsing.

Database upgrade : Connection string is : Data Source=sqlcluster.contoso.com\fimsql;Initial Catalog=FIMService;Integrated Security=SSPI;Pooling=true;Connection Timeout=225.

Database upgrade : Trying to connect to database server.

Database upgrade : Succesfully connected to database server.

Database upgrade : Setting the database version to -1.

Database upgrade : Starting database schema upgrade.

Schema upgrade: Starting schema upgrade

Schema upgrade : Upgrading FIM database from version: 20 to the latest version.

Schema upgrade : Starting schema upgrade from version 20 to 21.

...

Database upgrade : Out-of-box object upgrade completed.

Database ugrade : Completed successfully.

Database upgrade : Database version upgraded from: 20 to: 2004

The AppDomain's parent process is exiting.

You can clearly see that the database upgrade utility intelligently detects the current (FIM 2010) schema and upgrades all the way to the MIM 2016 database schema.

Bonus information

This is the command I ran to install the MIM 2016 Portal and Service. Password reset/registration portals are not deployed, no reporting and no PIM components. If you just want to test your FIM Service database upgrade, you can even get away with only installing the CommonServices component.

Msiexec /i "Service and Portal.msi" /qb! ADDLOCAL=CommonServices,WebPortals SQLSERVER_SERVER=sqlcluster.contoso.com\fimsql SQLSERVER_DATABASE=FIMService EXISTINGDATABASE=1 SERVICE_ACCOUNT_NAME=svcfim SERVICE_ACCOUNT_DOMAIN=CONTOSO SERVICE_ACCOUNT_PASSWORD=PASSWORD SERVICE_ACCOUNT_EMAIL=svcfim@contoso.com MAIL_SERVER=mail.contoso.com MAIL_SERVER_USE_SSL=1 MAIL_SERVER_IS_EXCHANGE=1 POLL_EXCHANGE_ENABLED=1 SYNCHRONIZATION_SERVER=fimsync.contoso.com SYNCHRONIZATION_SERVER_ACCOUNT=CONTOSO\svcfimma SERVICEADDRESS=fimsvc.contoso.com FIREWALL_CONF=1 SHAREPOINT_URL=http://idm.contoso.com SHAREPOINTUSERS_CONF=1 ACCEPT_EULA=1 FIREWALL_CONF=1 SQMOPTINSETTING=0 /l*v c:\MIM\LOGS\FIMServiceAndPortalsInstall.log

Note: SQL Management Studio Database Backup

Something I learned in the past year or so: whenever taking an “ad hoc” SQL backup, make sure to check the “Copy-only backup” box. That way you won’t interfere with the regular backups that have been configured by your DBA/Backup Admin.
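If you’d rather script this than click through Management Studio, the T-SQL equivalent of that checkbox is the COPY_ONLY option. The following is a minimal sketch: the disk paths and the logical file names in the MOVE clauses are placeholders I made up, so check the output of RESTORE FILELISTONLY for the real logical names and adjust the paths to your servers.

```sql
-- On the SQL 2008 instance: ad hoc backup that does not disturb the
-- regular backup chain. The path is a placeholder.
BACKUP DATABASE [FIMSynchronization]
TO DISK = N'D:\Backup\FIMSynchronization_copyonly.bak'
WITH COPY_ONLY, INIT, CHECKSUM, STATS = 10;
GO

-- On the SQL 2012 instance: first list the logical file names in the backup.
RESTORE FILELISTONLY
FROM DISK = N'D:\Backup\FIMSynchronization_copyonly.bak';
GO

-- Then restore, moving the files to the new locations.
-- The logical names and target paths below are assumptions.
RESTORE DATABASE [FIMSynchronization]
FROM DISK = N'D:\Backup\FIMSynchronization_copyonly.bak'
WITH MOVE N'FIMSynchronization'     TO N'E:\Data\FIMSynchronization.mdf',
     MOVE N'FIMSynchronization_log' TO N'F:\Logs\FIMSynchronization_log.ldf',
     RECOVERY, STATS = 10;
GO
```

The same pattern applies to the FIMService database.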

Note: SQL Server Service Broker

Lately I’ve seen cases where applications are unhappy because SQL Server Service Broker is disabled for their database. In my case it was an ADFS setup, but here’s an (older) example for FIM: http://justanothertechguy.blogspot.be/2012/11/fim-2010-unable-to-start-fim-service.html Typically, a database that is restored from an SQL backup has this feature disabled. I checked Service Broker for the FIM databases on the SQL 2008 platform and it was enabled. I checked on my SQL 2012 instance, where I did the restore, and I could see it was off. Here are some relevant commands:

Checking whether it’s on for your database:

SELECT is_broker_enabled FROM sys.databases WHERE name = 'FIMSynchronization';

Enable:

ALTER DATABASE FIMSynchronization SET ENABLE_BROKER WITH NO_WAIT;

If issues arise, you can try this one. I’m not an SQL guy, but as far as I can tell it is less graceful: ROLLBACK IMMEDIATE rolls back open transactions from other connections so that the change can take effect right away:

ALTER DATABASE FIMSynchronization SET ENABLE_BROKER WITH ROLLBACK IMMEDIATE;

And now it’s on:
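To confirm the state for both restored databases in one go, something like the following should do. It is only a sketch and assumes the default database names FIMSynchronization and FIMService:

```sql
-- Sketch: Service Broker state of the restored FIM databases.
-- is_broker_enabled = 1 means Service Broker is on for that database.
SELECT name, is_broker_enabled, service_broker_guid
FROM sys.databases
WHERE name IN (N'FIMSynchronization', N'FIMService');
```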

Remark

I didn’t go too deep into certain details. If you feel something is unclear, post a comment and I’ll see if I can add information where needed. The above doesn’t describe how to install the second FIM Portal/Service server or the standby MIM Synchronization Server. I expect those instructions to be fairly simple, as the database is already on the correct level. If I encounter issues, you can expect a post on that as well. To conclude: there’s more to do than just running the MIM installers and being done with it. You’ll have to transfer your customizations (like custom workflow DLLs) as well. FIM/MIM Synchronization extensions are transferred for you, but be sure to test everything! Don’t assume! Happy upgrading!

Conclusion

The FIM 2010 Portal and Service can be upgraded to MIM 2016 without the need for a FIM 2010 R2 intermediate upgrade. The FIM 2010 Synchronization Service could not be upgraded directly to MIM 2016. This could be tied to something specific in our environment, or it could be common…

Update #1 (12/08/2015)

Someone asked me whether FIM can be upgraded without providing the FIM/MIM Synchronization Service encryption keys. Obviously it cannot. That part has not changed. Whenever you install FIM/MIM on a new box and point it to an existing database, it will prompt for the key file. I’ve added some “copy keys” steps to my process so that you have them ready when the MSI prompts for them.
